
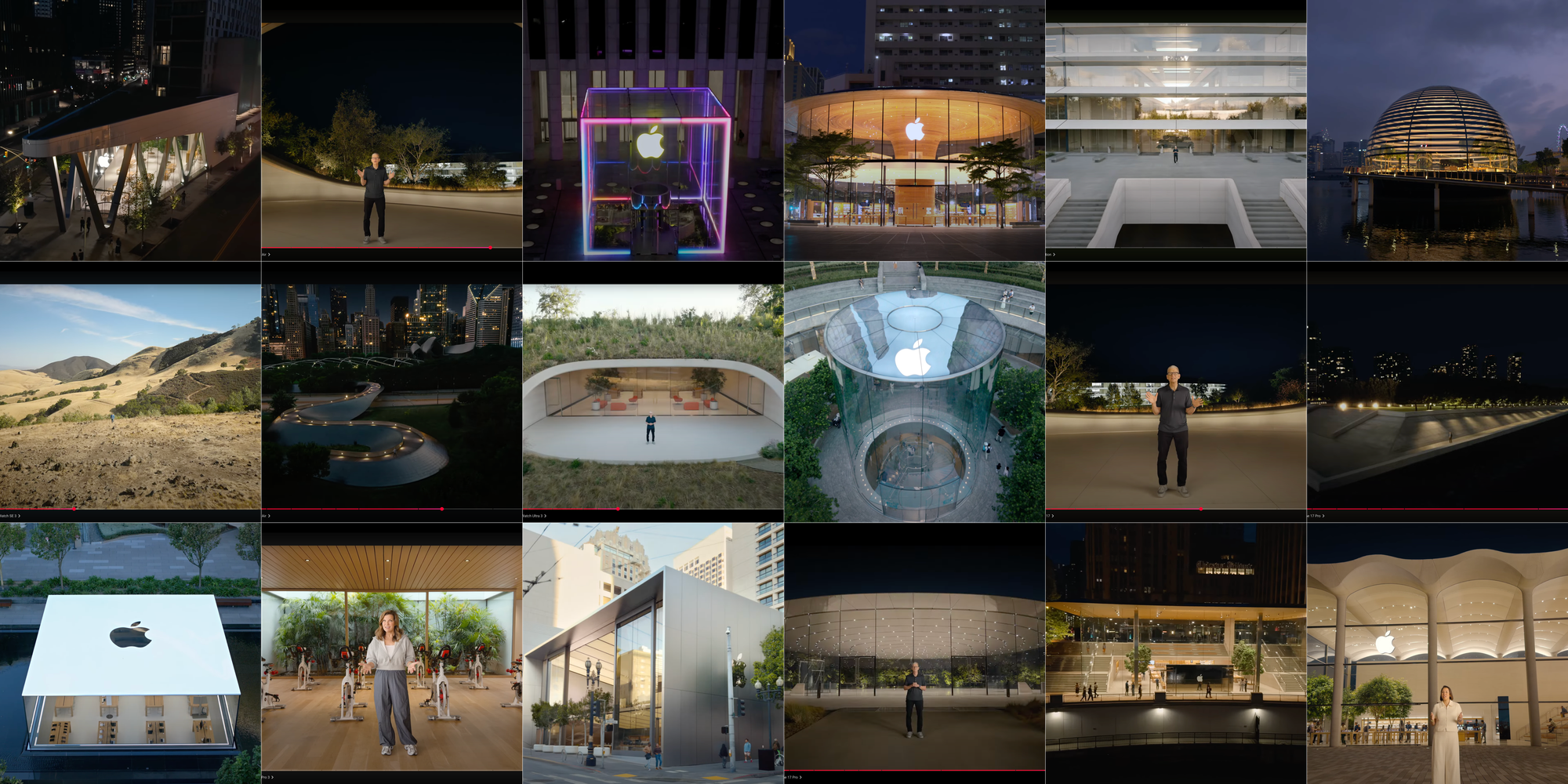

Apple Store (and More) Locations from September’s ‘Awe Dropping’ Event

It started with my question to a group of retired Apple friends as we watched the Apple September event:

I missed which Apple Store Kaiann is at; anyone know?

One friend guessed Dubai, suggesting the establishing shots “looked like the Middle East,” but I was doubtful Apple would fly one of its VPs to the Middle East for a short video shoot.

Besides, those opening shots kind of looked like Miami Beach to me, if I could trust my years of watching Miami Vice and Burn Notice. So I went through that segment again and spotted two landmarks: The Carlyle and the Leslie Hotel. A search in Maps confirmed those were in Miami Beach (yay TV!), and a check on Apple’s Find a Store page narrowed it down quickly to Apple Aventura.

Now I was curious: Could I identify all of the Apple stores shown in the video?

And just like that, a quest was born.

I expected it would be a chore—rewarding, but still. Finding Aventura involved scrubbing through that section of the video to identify landmarks, then combing through a list of possible stores until I found a matching photo.

I was familiar with only a handful of Apple stores, and I wasn’t sure how many were shown during the event. It took a few days to motivate myself to do a full rewatch: I dreaded the prospect of scrubbing through an hour-plus video, painstakingly hunting for any tiny detail that would unlock the mystery of each location.

I needn’t have worried.

Apple presenters spoke from only a handful of stores. Some stores were gimmes. Others were either iconic enough or had easily discernible landmarks, making them (relatively) easy to find.

But the biggest reason this exercise was not the slog I anticipated was ChatGPT. In April, OpenAI released an update that added image analysis, giving ChatGPT the ability to deduce locations from images. I was impressed (and scared) by it then, and its performance here was remarkable, nailing 17 of 20 locations (85%) on the first try, and identifying the rest through extended prompting. (When it was wrong, though, it was hilariously wrong.)

Regardless of the ease or difficulty in finding the right store, once found, it was immediately identifiable: the architecture of each store was unique and unmistakable—and, of course, stunningly beautiful.

The process

The workflow for identifying locations was straightforward, if manual: I paused the video and took a screenshot of each location. For each one, I first tried to identify it using my own knowledge plus landmark clues, then fed the screenshot into ChatGPT.

There were four types of locations I identified (though I’d started this quest with only the first one in mind):

- Apple Store locations with presenters (five stores).

- Non-Apple Store locations with presenters (four spots).

- Apple Store locations in flyovers/beauty shots (seven stores).

- Apple Park locations (four locations).

I easily identified all of the Apple Park locations, having worked for several years in and around the campus. Likewise, I’ve spent countless hours at Apple Park Visitor Center in Cupertino and Union Square in San Francisco.

The Cube at Fifth Avenue is iconic, and the Chicago skyline and the Tribune building behind Michigan Avenue made that a quick find (I’ve also visited both).

(It turns out all of the Apple Store presentation locations were in major American cities: New York, San Francisco, Cupertino, Chicago, and Miami. Having seen those skylines or views hundreds of times helped narrow the field of search considerably.)

The flyovers and non-store locations were the toughest; I relied almost exclusively on ChatGPT here, validating each answer independently through online searches (including Apple’s Find a Store).

In some cases, ChatGPT identified stores confidently but incorrectly. Fortunately, it was easy enough to check and inform it when it was wrong. (It was fun watching it spin its wheels.)

The locations

I’ve timestamped each segment to the event video, and linked to the Apple Store where relevant. For non-Apple Store locations, I’ve linked to a reference site.

I’m about 99% confident in my results. If I got something wrong (or somehow missed a location), please let me know! I’m on Mastodon (preferred) and Bluesky, or you can email me.

On to the locations!

Flyovers

These are beauty shots, no Apple presenters. All are from the first few minutes and are on-screen for just a second or two; you can watch straight through from 1:17 until Tim Cook starts to speak.

- 1:17 - Pudong, China

- 1:18 - Al Maryah Island, Abu Dhabi

- 1:20 - Fifth Avenue, New York

- 1:21 - Marina Bay Sands, Singapore

- 1:22 - Central World, Bangkok

- 1:23 - Zorlu Center, Istanbul

- 1:25 - Pudong, China (again)

- 1:26 - Apple Park, Cupertino

Apple Stores

These are locations with an Apple presenter. I’ve included the person and their segment.

- 4:50 - Union Square, San Francisco (Kate Bergeron, AirPods Pro 3)

- 17:26 - Apple Park Visitor Center, Cupertino (rear; Stan Ng, Apple Watch)

- 19:12 - Apple Park Visitor Center, Cupertino (upper pavilion; Dr. Sumbul Ahmad Desai, Health)

- 23:04 - Apple Park Visitor Center, Cupertino (rear side; Amanda Santangelo, Apple Watch SE)

- 31:11 - Aventura, Florida (Kaiann Drance, iPhone 17)

- 42:56 - Michigan Avenue, Chicago (John Ternus, iPhone Air)

- 56:54 - Downtown Brooklyn, New York (Joz, iPhone 17 Pro)

Apple Park

Spots in and around Apple Park, Cupertino with an Apple presenter. I’ve included the person and their segment.

- 1:30 - Transit Center, Entrance 2 (Tim Cook, intro)

- 9:42 - Fitness Center (Julz Arney, AirPods Pro 3 fitness)

- 12:55 - Steve Jobs Theater (Tim Cook, Apple Watch intro)

- 29:16 - The Observatory (facing in; Tim Cook, iPhone 17 intro)

- 40:20, 53:48 - The Observatory (facing out; Tim Cook, iPhone Air and iPhone 17 Pro intros)

- 1:10:34 - Steve Jobs Theater (Tim Cook, outro)

Non-Apple Locations

Locations with an Apple presenter. I’ve included the person and their segment.

- 25:29 - Black Diamond Mines Regional Preserve, CA (Eugene Kim, Apple Watch Ultra)

- 35:43 - Miami Beach, FL (Megan Nash, iPhone 17 cameras)

- 44:52 - BP Pedestrian Bridge at Millennium Park, Chicago (Tim Millet, iPhone Air’s Apple silicon)

- 1:01:37 - FDR Four Freedoms State Park, New York City/Roosevelt Island (Patrick Carroll, iPhone 17 Pro camera)

Final thoughts

To wrap this up, some thoughts on the Visitor Center, using ChatGPT, the one spot I can’t confidently pin down, and a brief note of thanks for a couple of the tools I used to put this together.

Staging Apple Park Visitor Center

This section caught my attention. Behind Stan Ng you can see product tables, a product wall, and a Genius-like bar with stools. None of that is normally there: that section of the store usually holds an Augmented Reality model of Apple Park and has a blank back wall. At first I thought it was a composite shot, but you can see the depth of the (physical) shelves at 23:04. The space was completely redressed just for this video.

ChatGPT

I’d rate ChatGPT’s performance highly, but when it failed, it failed hard. There are plenty of juicy nuggets in those failures, but in the interest of space, I’ll limit it to a few highlights:

- It initially identified Apple Al Maryah Island in Abu Dhabi as Apple Dubai Mall, stating confidently: “The golden carbon-fiber roof, the dramatic water features cascading down both sides of the approach, and the positioning between the two massive hotel/office towers make it unmistakable. This store is famous for its kinetic ‘Solar Wings’ that open to provide shade and views of the Dubai Fountain. […] I’d put it at 95% confidence.” It assuredly noted that “The water-stepped plaza and the gold roof are signature features of Apple Dubai Mall.”

- It wrongly identified Apple Aventura in Florida as, in succession: Apple BKC in Mumbai (“Here’s why this is unmistakable”, with a 100% confidence level); Apple Saket (“Here’s why Saket makes sense and BKC doesn’t”; confidence level 95%); Al Maryah Island (“Here’s why this is finally correct,” citing the “egg-shaped bollards lining the approach” which apparently are “unique to this location’s waterside plaza”, with 100% confidence); and Central World (“it finally clicks” because of the “distinctive ‘lotus petal’ roof pattern”, with 100% confidence: “this time I triple-checked the architecture against Apple’s site photos”). It then cycled through Apple Tower Theatre, Bağdat Caddesi, Marina Bay Sands, Via del Corso, The Grove, Emaar Square Mall (it appears there’s no such store), Brickell City Centre, Omotesando, Jing’an, and Piazza Liberty, considering then dismissing each in turn, before finally settling on the right store. Quite the global tour.

- For Apple Downtown Brooklyn, it incorrectly guessed Tower Theatre (“wait no, wrong coast”), Williamsburg, and Upper East Side, eventually landing on the right store but the wrong address. When I pointed that out, it spiraled, again guessing Williamsburg, then “Apple Upper West Side’s little sibling” (what?) and a couple of seemingly nonexistent stores (“The triangular plan, diagonal steel supports, and the elevated plaza setting are unmistakable: this is Apple Store, Washington Heights” and Pittsburgh/Carnegie Mellon), before finally returning both the correct store and the correct address.

Black Diamond Mines Regional Preserve

This is the one spot I have very little confidence I got right. I’m comfortable stating it’s in Northern California, in the East Bay hills… and that’s it. I’m relying 100% on ChatGPT’s assessment here, but it’s worth noting that it first suggested Mount Diablo State Park. Both seem plausible. If you know the spot, please get in touch!

Tools

Most of the work of putting this piece together was writing and verification, but I wanted to highlight two tools that made things easier: BBEdit and Retrobatch.

BBEdit was critical in creating the timestamped links, taking a list of times, locations, and URLs that looked like this:

1:17 - Pudong, China: https://www.apple.com.cn/retail/pudong/

1:18 - Al Maryah Island, Abu Dhabi: https://www.apple.com/ae/retail/almaryahisland/

…

And converting it to Markdown (using grep) that looked like this:

- [1:17](https://www.youtube.com/watch?v=H3KnMyojEQU&t=1m17s) - [Pudong][pudong], China

- [1:18](https://www.youtube.com/watch?v=H3KnMyojEQU&t=1m18s) - [Al Maryah Island][AlMaryahIsland], Abu Dhabi

…

It also made it easy to sort the locations alphabetically and by timestamp so I could include the tl;dr below. I’ve been using BBEdit for most of its 30+ year history. It’s literally the first app I install on any new Mac.
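For readers without BBEdit, the same conversion and sorting can be approximated in a few lines of Python. This is a minimal sketch, not the grep pattern I actually used. The function names are hypothetical, and the reference label is simply the store name with spaces removed, so it produces `[Pudong][Pudong]` where my list has `[Pudong][pudong]` (Markdown reference labels are matched case-insensitively, so both resolve). The `1h1m37s` form for timestamps past an hour is an assumption extrapolated from the `1m17s` links above.

```python
import re

# Video URL taken from the example links above.
VIDEO_URL = "https://www.youtube.com/watch?v=H3KnMyojEQU"

# Matches lines such as "1:17 - Pudong, China: https://example.com/".
LINE_RE = re.compile(
    r"^(?P<time>\d+(?::\d{2}){1,2}) - (?P<place>.+?): (?P<url>https?://\S+)$"
)


def to_seconds(timestamp):
    """Turn "1:17" or "1:01:37" into a number of seconds, for sorting."""
    seconds = 0
    for part in timestamp.split(":"):
        seconds = seconds * 60 + int(part)
    return seconds


def youtube_offset(timestamp):
    """Turn "1:17" into "1m17s" and "1:01:37" into "1h1m37s"."""
    parts = [int(part) for part in timestamp.split(":")]
    units = "hms"[-len(parts):]
    return "".join(f"{value}{unit}" for value, unit in zip(parts, units))


def to_markdown(line):
    """Return (list item, reference definition) for one input line."""
    match = LINE_RE.match(line.strip())
    if match is None:
        raise ValueError(f"unrecognized line: {line!r}")
    time, place, url = match.group("time", "place", "url")
    # Link only the store name (the text before the first comma).
    name, comma, rest = place.partition(",")
    # Hypothetical labeling rule: the store name with spaces removed.
    label = name.replace(" ", "")
    item = (
        f"- [{time}]({VIDEO_URL}&t={youtube_offset(time)})"
        f" - [{name}][{label}]{comma}{rest}"
    )
    return item, f"[{label}]: {url}"


lines = [
    "1:18 - Al Maryah Island, Abu Dhabi: https://www.apple.com/ae/retail/almaryahisland/",
    "1:17 - Pudong, China: https://www.apple.com.cn/retail/pudong/",
]

# Sort by position in the video; comparing the raw strings would misorder
# entries such as "9:42" and "1:01:37".
by_time = sorted(lines, key=lambda line: to_seconds(line.split(" - ", 1)[0]))
# Sort alphabetically by location name, ignoring case.
by_name = sorted(lines, key=lambda line: line.split(" - ", 1)[1].casefold())

for line in by_time:
    item, definition = to_markdown(line)
    print(item)
    print(definition)
```

Converting each timestamp to seconds before sorting is the one non-obvious step: a plain text sort would put 1:01:37 ahead of 9:42.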

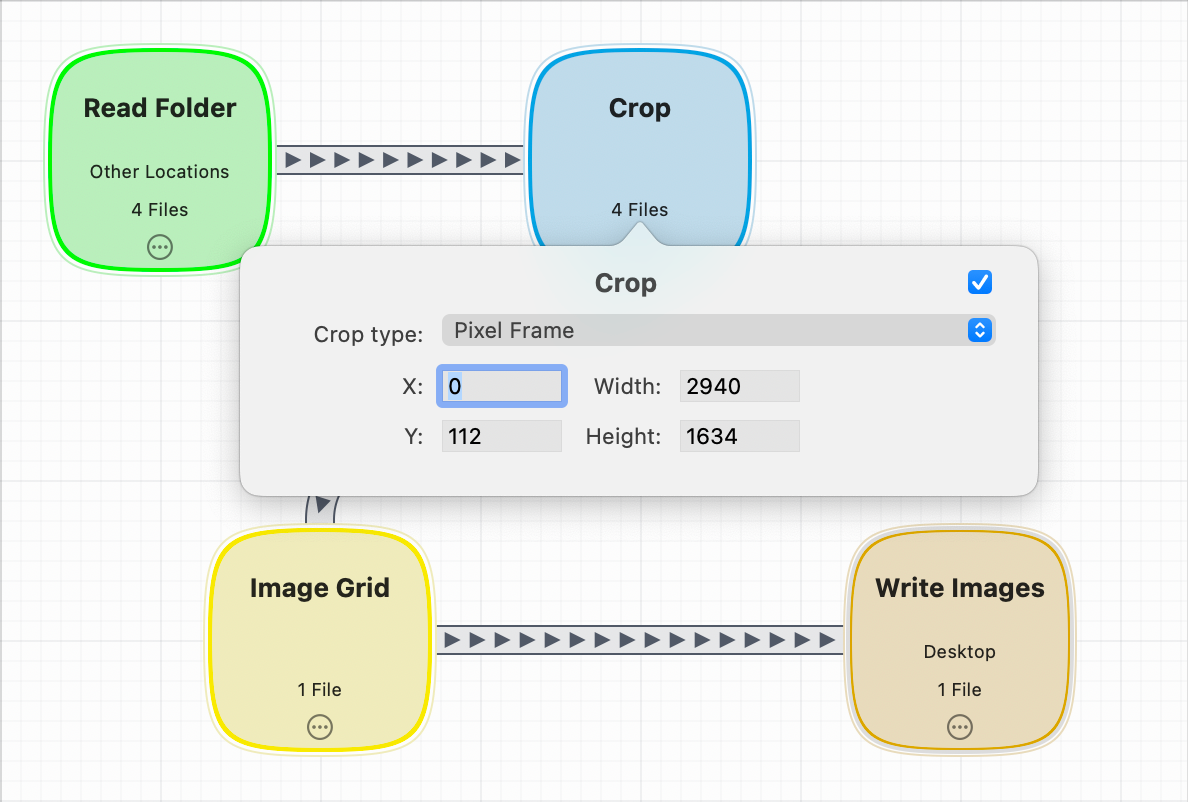

The second tool that proved invaluable was Retrobatch. Each screenshot was taken from YouTube in full screen mode, which shows the video title at the top and playback controls at the bottom. As those elements never shift, Retrobatch could crop each image at specific coordinates to remove those areas (and to make grids, which I then further edited using Acorn, from the same developer).

BBEdit and Retrobatch (and Acorn) are created by small, indie developers, and are among the best apps on the platform.

TL;DR

If you’re just looking for a list of the stores featured in the video, here’s a handy, alphabetical list:

- Al Maryah Island, Abu Dhabi

- Apple Park Visitor Center, Cupertino

- Aventura, Florida

- Central World, Bangkok

- Downtown Brooklyn, New York

- Fifth Avenue, New York

- Marina Bay Sands, Singapore

- Michigan Avenue, Chicago

- Pudong, China

- Union Square, San Francisco

- Zorlu Center, Istanbul

And here’s each location in the order it appears in the video:

- 1:17 - Pudong, China

- 1:18 - Al Maryah Island, Abu Dhabi

- 1:20 - Fifth Avenue, New York

- 1:21 - Marina Bay Sands, Singapore

- 1:22 - Central World, Bangkok

- 1:23 - Zorlu Center, Istanbul

- 1:25 - Pudong, China (again)

- 1:26 - Apple Park, Cupertino

- 1:30 - Transit Center, Entrance 2 (Tim Cook, intro)

- 4:50 - Union Square, San Francisco (Kate Bergeron, AirPods Pro 3)

- 9:42 - Fitness Center (Julz Arney, AirPods Pro 3 fitness)

- 12:55 - Steve Jobs Theater (Tim Cook, Apple Watch intro)

- 17:26 - Apple Park Visitor Center, Cupertino (rear; Stan Ng, Apple Watch)

- 19:12 - Apple Park Visitor Center, Cupertino (upper pavilion; Dr. Sumbul Ahmad Desai, Health)

- 23:04 - Apple Park Visitor Center, Cupertino (rear side; Amanda Santangelo, Apple Watch SE)

- 25:29 - Black Diamond Mines Regional Preserve, CA (Eugene Kim, Apple Watch Ultra)

- 29:16 - The Observatory (facing in; Tim Cook, iPhone 17 intro)

- 31:11 - Aventura, Florida (Kaiann Drance, iPhone 17)

- 35:43 - Miami Beach, FL (Megan Nash, iPhone 17 cameras)

- 40:20 - The Observatory (facing out; Tim Cook, iPhone Air intro)

- 42:56 - Michigan Avenue, Chicago (John Ternus, iPhone Air)

- 44:52 - BP Pedestrian Bridge at Millennium Park, Chicago (Tim Millet, iPhone Air’s Apple silicon)

- 53:48 - The Observatory (facing out; Tim Cook, iPhone 17 Pro intro)

- 56:54 - Downtown Brooklyn, New York (Joz, iPhone 17 Pro)

- 1:01:37 - FDR Four Freedoms State Park, New York City/Roosevelt Island (Patrick Carroll, iPhone 17 Pro camera)

- 1:10:34 - Steve Jobs Theater (Tim Cook, outro)